LLMs continue the tradition of art’s preoccupation with authorship and authenticity

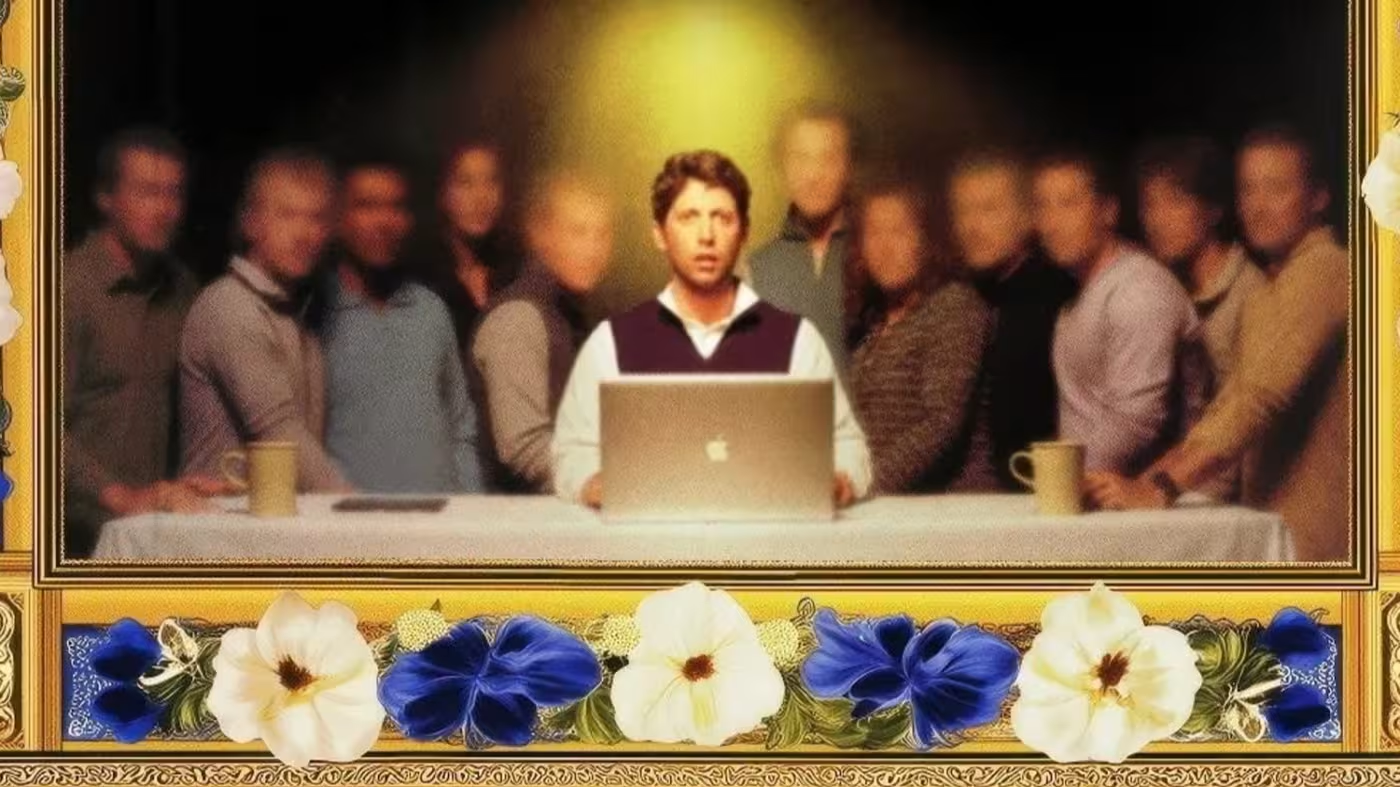

A poster for ‘Doomers’ by Matthew Gasda, who enlisted the help of AI for research. History shows tech can be an aid rather than a replacement © Doomers

The writer is a language architect at Microsoft and co-producer of ‘Doomers’, which opens in London this month

When Doomers launched in New York last spring there was an air of unease among some in the audience.

The play, which tells the story of Sam Altman’s struggle for control of OpenAI, was created in part by consulting generative artificial intelligence chatbots ChatGPT and Claude. When they found these entities credited in the programme, New York audiences were unsettled. How authentic is a play that relies, even in part, on AI?

The speed with which AI has permeated art is unnerving because of the sacrosanct nature of creativity. But more writers are experimenting with large language models as a way to play around with authorship. Last year, the novelist Curtis Sittenfeld asked ChatGPT to write a short story and used the same prompts to create a story of her own. She then asked readers to vote on which version seemed more authentic (she proclaimed the AI version too boring to finish).

Writing as Aidan Marchine, the novelist Stephen Marche used three different AI models to write the novella Death of an Author in 2023. “I am the creator of this work, 100 per cent,” he said. “But, on the other hand, I didn’t create the words.”

Art has long been preoccupied with questions of origin, plagiarism and intent. In Jorge Luis Borges’s 1939 story Pierre Menard, autor del Quijote, the main character immerses himself in the life and work of Miguel de Cervantes, attempting to arrive at Quixote himself, as if anew. The narrator says that the few chapters of work Menard writes “coincide” with Don Quixote “word for word and line for line” — yet they have different meanings because they were written at a different time and so draw on modern contexts. The nature of language has upturned the project.

Later, the American philosopher John Searle flipped the conundrum with his Chinese Room thought experiment. He imagined himself following a computer program and manipulating a set of Chinese characters into coherent sentences, according to a set of rules. To someone fluent in Chinese, Searle’s creation would make perfect sense, as if it had been written by a native speaker, but he himself would have no idea of its meaning. By extension, a computer program can appear to use language fluently without any real understanding.

Does art derive its meaning from the artist’s effort — or does its meaning reside in the finished work, regardless of how it was produced? Doomers’ playwright, Matthew Gasda, didn’t employ AI to write his script but he did enlist its help in research and editing. Chatbots walked him through the preoccupations of the Californian tech subculture — the job titles, philosophical debates and legal fights. And they improved his writing process, catching reliance on clichés and repeated words.

Does this mean AI will replace writers? Terrified at its encroachment on their livelihoods, Hollywood’s writers went on strike in 2023. They eventually settled with an agreement that gives them a say in whether generative AI is used. If it is used, that must be disclosed, and writers still get credit and compensation. That’s a good start for AI usage in the creative process.

When Doomers finished its New York run, we conducted our own experiment. We trained a model on Gasda’s work and asked it to write an alternative version of the play. It was elegant but quickly descended into incoherence. Our conclusion was that AI is best left as a research tool.

History shows that advanced tech can be an aid rather than a replacement. Computers began beating the world’s best chess players in the 1990s, but instead of destroying the game they enriched it, with grandmasters learning to use chess engines, the successors to IBM’s Deep Blue, as sparring partners.

Concerns about AI in creative work are legitimate. But instead of freezing with fear we should be running more experiments, at least for now.

Source: https://www.ft.com/content/d53ceff5-3f92-4450-814d-f3590a4e33b6